Hi all,

Are the Level-3 processors for Sen2Agri version 1.8 already available, and should they start automatically if they are enabled for my site and L2A processing is already well advanced?

Because in my case they are not starting.

Martin

Hello,

Could you provide information about the season you use?

Is it a season from the past years or is a real-time season?

Normally, these automatic processors start as predefined Scheduled Jobs and have the Start type “Scheduled” in the Job History (I see in the screenshot that they are Canceled).

Nevertheless, when processing seasons from the past, there is an issue in the current implementation of the system if you use automatic season schedules (that is, if you select the products to be created when you create the season).

The problem with the automatic processors defined at season creation is that they need to execute at moments in the past. If T is the date in the past at which a processor needs to execute, it needs the products from T - 30 days onwards (for the Composite) or from T - 10 days onwards (for LAI).

Unfortunately, unlike real-time processing (where, at date T, all L2A products for the desired interval are already available), past reprocessing triggers the processors as soon as at least one L2A product becomes available, so some L2A products from the desired interval may not be used in the processing.
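As a worked example of those windows, here is a minimal sketch that computes, for an execution date T, the interval of L2A acquisitions a processor would draw on. It assumes the 30-day (Composite) and 10-day (LAI) lengths quoted above and treats both ends as inclusive; how Sen2Agri handles the boundaries exactly is an assumption. The function name `l3_window` is hypothetical, and GNU `date` is required.

```shell
# Hypothetical helper: print the L2A acquisition window "<start> <end>"
# (both YYYY-MM-DD) needed by a processor that runs at date T.
# Window lengths follow the explanation above: 30 days for the
# Composite (L3A), 10 days for LAI. Requires GNU date for "-d".
l3_window() {
  local processor t days start
  processor=$1
  t=$2
  case "$processor" in
    composite) days=30 ;;
    lai)       days=10 ;;
    *) echo "unknown processor: $processor" >&2; return 1 ;;
  esac
  # TZ=UTC keeps daylight-saving changes out of the calculation
  start=$(TZ=UTC date -d "$t $days days ago" +%F) || return 1
  echo "$start $t"
}
```

For example, `l3_window composite 2017-03-01` prints `2017-01-30 2017-03-01` and `l3_window lai 2017-03-01` prints `2017-02-19 2017-03-01`. For a past season, a Composite triggered for 1 March 2017 therefore only sees the whole window if every L2A product from 30 January onwards has already been processed at that moment.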

For past seasons, I would recommend not adding automatic processors, but rather adding Scheduled jobs from the Dashboard for an interval in which you know the L2A products are available, or using custom jobs.

To be sure you process everything, it is better to wait for the L2A processor to finish and then add a periodic Scheduled Job that does all the work.

Otherwise, you can add “Once” scheduled jobs that each process exactly one interval.
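Before adding a “Once” job for an interval, it can help to confirm how many L2A products already exist in it. A minimal sketch, assuming the L2A product folders are named like the one in the executor log later in this thread (`S2A_MSIL2A_20170118T095321_...SAFE`, with the sensing date in the third underscore-separated field); other naming schemes are simply skipped, and `count_l2a_in_window` is a hypothetical name.

```shell
# Hypothetical helper: count Sentinel-2 L2A product names (read from
# stdin, one per line) whose sensing date lies in [start, end].
# Dates are given as YYYY-MM-DD; names that are not "..._MSIL2A_..."
# (e.g. other missions or formats) are skipped.
count_l2a_in_window() {
  awk -F_ -v s="$1" -v e="$2" '
    BEGIN { gsub(/-/, "", s); gsub(/-/, "", e) }   # 2017-01-30 -> 20170130
    $2 == "MSIL2A" {
      d = substr($3, 1, 8)                           # YYYYMMDD of sensing time
      if (d >= s && d <= e) n++
    }
    END { print n + 0 }'
}
```

Usage, with the archive path taken from this site's executor log (adjust it to your own installation): `ls /mnt/archive/maccs_def/at/l2a/ | count_l2a_in_window 2017-01-30 2017-03-01`.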

Hope this helps.

Best regards,

Cosmin

Hi,

Ah, OK, this already explains why they do not start automatically: I enabled the L3A option at the beginning, when I created the site. But I think it’s not possible to deselect it again?

My season is for past processing only and covers only data from 2017.

I later cancelled some of the jobs manually in the “systemoverview” tab because they never produced any output; that is why some of them are ‘cancelled’ in the screenshot.

In the Dashboard tab it seems that the processor never really starts:

But all the subdirectories are created in my folder (~/orchestrator_temp/), including one XML file in the ‘product-formatter’ folder that contains the names of the scenes to be considered for the L3 product.

When I start a job manually in the Dashboard and then restart sen2agri-orchestrator.service in the terminal, I get the following messages:

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: Reading settings from /etc/sen2agri/sen2agri.conf

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: GetProcessorDescriptions took 3 ms

Apr 27 11:13:05 vm-sen2agri systemd[1]: Started Orchestrator for Sen2Agri.

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: Scheduler L3A: Getting season dates for site 5 for scheduled date Wed Mar 1 00:00:00 2017!

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: GetSiteSeasons took 4 ms

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: Scheduler L3A: Extracted season dates: Start: Wed Mar 1 00:00:00 2017, End: Sun Dec 31 00:00:00 2017!

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: GetNewEvents took 3 ms

Apr 27 11:13:05 vm-sen2agri sen2agri-orchestrator[1309]: GetConfigurationParameters took 115 ms

Apr 27 11:13:06 vm-sen2agri sen2agri-orchestrator[1309]: GetProducts took 39 ms

Apr 27 11:13:06 vm-sen2agri sen2agri-orchestrator[1309]: Scheduled job for L3A and site ID 5 with start date Wed Mar 1 00:00:00 2017 and end date Fri Feb 24 00:00:00 2017 will not be executed (no products)!
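One detail in the orchestrator line above may be worth checking: the reported end date (Fri Feb 24 2017) is earlier than the start date (Wed Mar 1 2017), so that particular interval cannot contain any product. Whether this is what blocks the job here is only an assumption. The following minimal sketch (hypothetical name `check_job_interval`, GNU `date` required) flags such inverted intervals in orchestrator log lines of exactly this format.

```shell
# Hypothetical helper: read orchestrator log lines from stdin and, for
# each "... with start date X and end date Y ..." line, report whether
# the end date falls before the start date. Other lines are ignored.
check_job_interval() {
  local line s e
  while IFS= read -r line; do
    case "$line" in
      *"with start date "*" and end date "*) ;;
      *) continue ;;
    esac
    s=${line#*"with start date "}
    s=${s%%" and end date "*}
    e=${line#*" and end date "}
    # keep only the five date fields, e.g. "Fri Feb 24 00:00:00 2017"
    e=$(printf '%s\n' "$e" | awk '{ print $1, $2, $3, $4, $5 }')
    if [ "$(TZ=UTC date -d "$s" +%s)" -gt "$(TZ=UTC date -d "$e" +%s)" ]; then
      echo "INVERTED: start=$s end=$e"
    else
      echo "ok: start=$s end=$e"
    fi
  done
}
```

Usage: `journalctl -u sen2agri-orchestrator | check_job_interval`, assuming the unit name matches the sen2agri-orchestrator.service mentioned above.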

But at least 70% of the L2A products for the site are available…

Normally it shouldn’t be necessary to wait for all L2A products, should it?

Same problem with L3B & L3E tasks…

I also tried to submit a ‘custom job’ in the GUI; it is accepted but does not start, and the terminal shows the same message (“will not be executed (no products)!”).

I was not sure about this option: do I need to enter the start date of my season as the ‘Synthesis date’, or something else?

Do you know what else I could try?

Martin

Maybe the log messages of sen2agri-executor or sen2agri-scheduler give somebody a hint:

scheduler:

May 03 10:14:44 vm-sen2agri sen2agri-scheduler[883]: GetScheduledTasks took 4 ms

May 03 10:14:44 vm-sen2agri sen2agri-scheduler[883]: UpdateScheduledTasksStatus took 29 ms

May 03 10:14:44 vm-sen2agri sen2agri-scheduler[883]: The job for processor: 2, siteId: 2 cannot be started now as is invalid

executor:

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: MarkStepPendingStart took 59 ms

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: GetProcessorDescriptions took 2 ms

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: GetConfigurationParameters took 4 ms

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: GetProcessorDescriptions took 3 ms

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: GetConfigurationParameters took 4 ms

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: HandleStartProcessor: Executing command srun with params --qos qoscomposite --job-name TSKID_24444_STEPNAME_MaskHandler_0 /usr/bin/sen2agri-processor-wrapper SRV_IP_ADDR=127.0.0.1 SRV_PORT_NO=7777 WRP_SEND_RETRIES_NO=3600 WRP_TIMEOUT_BETWEEN_RETRIES=1000 WRP_EXECUTES_LOCAL=1 JOB_NAME=TSKID_24444_STEPNAME_MaskHandler_0 PROC_PATH=/usr/bin/otbcli PROC_PARAMS MaskHandler -xml /mnt/archive/maccs_def/at/l2a/S2A_MSIL2A_20170118T095321_N0204_R079_T33TXN_20170118T095324.SAFE/S2A_OPER_SSC_L2VALD_33TXN____20170118.HDR -out /mnt/archive/orchestrator_temp/l3a/89/24444-composite-mask-handler/all_masks_file.tif -sentinelres 10

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: HandleStartProcessor: Executing command sbatch with params --job-name TSKID_24444_STEPNAME_MaskHandler_0 --qos qoscomposite /tmp/sen2agri-executor.y28129

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: HandleStartProcessor: Sbatch command returned: "Submitted batch job 257

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: "

May 03 10:27:15 vm-sen2agri sen2agri-executor[28129]: MarkStepPendingStart took 28 ms

I really don’t get the problem.

Thanks.

Martin

Hello,

From what I see, an L3A processor was successfully started and is running. Could you check, either in the sen2agri-executor journalctl log or in the Monitoring tab, that the steps are executed successfully? These logs seem incomplete.
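To follow a step from the executor log into SLURM, the batch job number returned by sbatch (257 in the log above) can be looked up with `squeue`; while a job is pending, its reason column usually says why. A minimal sketch, assuming the journal lines look exactly like the ones quoted earlier; `submitted_job_ids` and `show_job_states` are hypothetical names.

```shell
# Hypothetical helper: extract the batch job numbers from
# sen2agri-executor log lines such as
#   ... Sbatch command returned: "Submitted batch job 257
submitted_job_ids() {
  sed -n 's/.*Submitted batch job \([0-9][0-9]*\).*/\1/p'
}

# Show state and pending reason for each submitted job (needs SLURM).
# squeue format codes: %i = job id, %T = state, %r = reason.
show_job_states() {
  submitted_job_ids | while read -r id; do
    squeue -h -j "$id" -o "%i %T %r"
  done
}
```

Usage: `journalctl -u sen2agri-executor --since today | show_job_states`, assuming the unit is named after the log prefix. Jobs that have already finished drop out of `squeue` after a while and are then only visible through `sacct`.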

Also note that even if you start multiple L3A products at the same time, the system (actually SLURM) executes only one step at a time. If you have a powerful machine with many processors (> 8 or > 16), you can increase the qoscomposite or qoslai limit in SLURM to allow multiple concurrent executions.
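To see the current limit before raising it, the `sacctmgr ... --parsable` output can be read column by name. A minimal sketch: `qos_grpjobs` is a hypothetical helper, and the `sacctmgr modify` line in the comment is a typical way to raise GrpJobs, given as an assumption to adapt (choose a value your core count can sustain).

```shell
# Hypothetical helper: print the GrpJobs limit of one QOS from the
# output of e.g.
#   sacctmgr list qos format=Name,GrpJobs --parsable
# Columns are located by header name, so extra columns do not matter.
# An empty result means no GrpJobs limit is set; the function exits
# non-zero if the QOS is not listed at all.
qos_grpjobs() {
  awk -F'|' -v q="$1" '
    NR == 1 { for (i = 1; i <= NF; i++) col[$i] = i; next }
    $col["Name"] == q { print $col["GrpJobs"]; found = 1 }
    END { exit !found }'
}

# To allow e.g. two concurrent composite steps (run as a SLURM admin):
#   sacctmgr -i modify qos where name=qoscomposite set GrpJobs=2
```

Usage: `sacctmgr list qos format=Name,GrpJobs --parsable | qos_grpjobs qoscomposite`.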

Hope this helps,

Cosmin

Hi Cosmin,

Attached are the log messages from the last few days:

executor_log.txt (2.9 MB)

It seems that the jobs are always submitted but never actually start producing output.

The Monitoring tab looks like this:

Sometimes downloads that have already finished show up.

The output links do not show any errors.

In the directory “~/orchestrator_temp”, all subfolders and one XML file (~l3a/51/product-fromatter/executionInfos.xml) are created.

All other subfolders (e.g. 1-composite-mask-handler, 2-composite-preprocessing, …) are empty!
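To list at a glance which step folders of a job never received any output, `find -empty` is enough. A minimal sketch; `empty_step_dirs` is a hypothetical name, and the job folder in the usage line is the one mentioned above, which may differ on other installations.

```shell
# Hypothetical helper: list (by name, sorted) the immediate
# subdirectories of a job folder that are completely empty.
empty_step_dirs() {
  find "$1" -mindepth 1 -maxdepth 1 -type d -empty -exec basename {} \; | sort
}
```

Usage: `empty_step_dirs ~/orchestrator_temp/l3a/51`.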

I’ll try to execute the commands manually in the console now.

Do you think updating from version 1.8 to 1.8.1 could help?

I use 16 cores for processing.

Martin

Hi Martin,

I think updating to version 1.8.1 will not change anything regarding this problem, unless you need to create L3C and L3D products from the L3B products.

From what I see, the tasks for the processors are created correctly, so there might be a problem with SLURM rather than with the Sen2Agri system components.

Could you first try running (as a test):

srun ls

Normally, this should list the files in the current folder.

If that succeeds, you can try the command:

sudo sacct --parsable > sacct_results.txt

and also:

sudo sacctmgr list qos format=Account,Name,GrpJobs --parsable
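A file like sacct_results.txt can get large; a per-state count quickly shows whether jobs ever ran or are all stuck in PENDING. A minimal sketch, assuming the default `sacct` columns (which include State) in the `|`-separated `--parsable` format; `sacct_state_counts` is a hypothetical name.

```shell
# Hypothetical helper: count rows per State in "sacct --parsable"
# output (stdin). The State column is found by its header name.
# Job steps (e.g. "257.batch") are counted as separate rows.
sacct_state_counts() {
  awk -F'|' '
    NR == 1 { for (i = 1; i <= NF; i++) if ($i == "State") s = i; next }
    s && $s != "" { n[$s]++ }
    END { for (k in n) print k, n[k] }' | sort
}
```

Usage: `sacct_state_counts < sacct_results.txt`.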

Also, the sen2agri-executor application tries to communicate with the tasks using TCP port 7777 by default (configurable in the config table). Could you:

Best regards,

Cosmin

Hi,

OK, running srun ls returns the following message:

[root@vm-sen2agri ~]# srun ls

srun: Required node not available (down, drained or reserved)

srun: job 2548 queued and waiting for resources

Here is the SLURM status, in case it helps:

[root@vm-sen2agri ~]# systemctl status slurm.service

● slurm.service - LSB: slurm daemon management

Loaded: loaded (/etc/rc.d/init.d/slurm; bad; vendor preset: disabled)

Active: active (running) since Fri 2018-04-27 20:46:41 CEST; 1 weeks 4 days ago

Docs: man:systemd-sysv-generator(8)

Process: 1286 ExecStart=/etc/rc.d/init.d/slurm start (code=exited, status=0/SUCCESS)

Main PID: 1288 (slurmctld)

CGroup: /system.slice/slurm.service

‣ 1288 /usr/sbin/slurmctld

Warning: Journal has been rotated since unit was started. Log output is incomplete or unavailable.

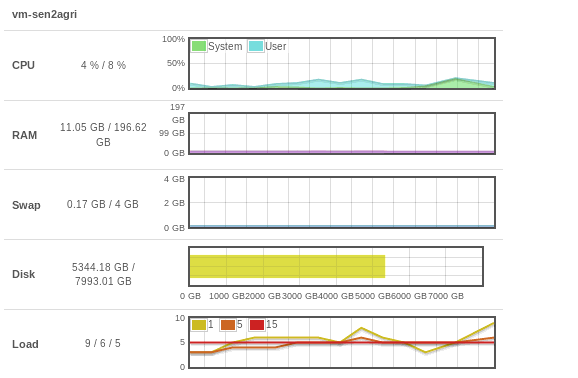

There should be enough processing power available:

Thanks!

Hi, please try:

$ systemctl status slurmd slurmctld slurmdbd

$ systemctl stop slurm slurmd slurmctld slurmdbd

$ systemctl restart slurmd slurmctld slurmdbd

$ systemctl status slurmd slurmctld slurmdbd

$ srun ls

Hi Inicola,

After restarting the system I get the same message as before:

[root@vm-sen2agri ~]# srun ls

srun: Required node not available (down, drained or reserved)

srun: job 2554 queued and waiting for resources

I have already waited more than 15 minutes, but there is no response.
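Since `srun` just keeps waiting while no node is available, a smoke test like `srun ls` can be bounded in time with coreutils `timeout`, which exits with status 124 when the limit is reached. A minimal sketch (hypothetical name `with_deadline`); after a timeout it may be worth checking with `squeue` that no request was left pending.

```shell
# Hypothetical helper: run a command with a time limit.
# Returns the command's own status, or 124 if coreutils timeout
# had to stop it because it was still running after <seconds>.
with_deadline() {
  local secs rc
  secs=$1
  shift
  timeout "$secs" "$@"
  rc=$?
  if [ "$rc" -eq 124 ]; then
    echo "no result after ${secs}s: '$*' may be waiting for resources" >&2
  fi
  return "$rc"
}
```

Usage: `with_deadline 60 srun ls`.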

But I can execute:

sudo sacct --parsable

output:

sacct_results.txt (200.1 KB)

Martin

Hi Martin,

Can you run the commands above and paste their output here?

[root@vm-sen2agri ~]# systemctl status slurmd slurmctld slurmdbd

● slurmd.service - Slurm node daemon

Loaded: loaded (/usr/lib/systemd/system/slurmd.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/slurmd.service.d

└─override.conf

Active: active (running) since Wed 2018-05-09 16:23:17 CEST; 20min ago

Process: 22606 ExecStart=/usr/sbin/slurmd $SLURMD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 22765 (slurmd)

CGroup: /system.slice/slurmd.service

└─22765 /usr/sbin/slurmd

May 09 16:23:17 vm-sen2agri systemd[1]: Starting Slurm node daemon...

May 09 16:23:17 vm-sen2agri systemd[1]: PID file /var/run/slurmd.pid not rea....

May 09 16:23:17 vm-sen2agri systemd[1]: Started Slurm node daemon.

● slurmctld.service - Slurm controller daemon

Loaded: loaded (/usr/lib/systemd/system/slurmctld.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-05-09 16:23:17 CEST; 20min ago

Process: 22607 ExecStart=/usr/sbin/slurmctld $SLURMCTLD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 22611 (slurmctld)

CGroup: /system.slice/slurmctld.service

└─22611 /usr/sbin/slurmctld

May 09 16:23:17 vm-sen2agri systemd[1]: Starting Slurm controller daemon...

May 09 16:23:17 vm-sen2agri systemd[1]: PID file /var/run/slurmctld.pid not ....

May 09 16:23:17 vm-sen2agri systemd[1]: Started Slurm controller daemon.

● slurmdbd.service - Slurm DBD accounting daemon

Loaded: loaded (/usr/lib/systemd/system/slurmdbd.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-05-09 16:23:17 CEST; 20min ago

Process: 22608 ExecStart=/usr/sbin/slurmdbd $SLURMDBD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 22656 (slurmdbd)

CGroup: /system.slice/slurmdbd.service

└─22656 /usr/sbin/slurmdbd

May 09 16:23:17 vm-sen2agri systemd[1]: Starting Slurm DBD accounting daemon...

May 09 16:23:17 vm-sen2agri systemd[1]: Started Slurm DBD accounting daemon.

Hint: Some lines were ellipsized, use -l to show in full.

[root@vm-sen2agri ~]# systemctl stop slurm slurmd slurmctld slurmdbd

[root@vm-sen2agri ~]# systemctl restart slurmd slurmctld slurmdbd

[root@vm-sen2agri ~]# systemctl status slurmd slurmctld slurmdbd

● slurmd.service - Slurm node daemon

Loaded: loaded (/usr/lib/systemd/system/slurmd.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/slurmd.service.d

└─override.conf

Active: active (running) since Wed 2018-05-09 16:43:52 CEST; 9ms ago

Process: 20241 ExecStart=/usr/sbin/slurmd $SLURMD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 20290 (slurmd)

CGroup: /system.slice/slurmd.service

└─20290 /usr/sbin/slurmd

May 09 16:43:51 vm-sen2agri systemd[1]: Starting Slurm node daemon...

May 09 16:43:52 vm-sen2agri systemd[1]: Started Slurm node daemon.

● slurmctld.service - Slurm controller daemon

Loaded: loaded (/usr/lib/systemd/system/slurmctld.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-05-09 16:43:51 CEST; 305ms ago

Process: 20242 ExecStart=/usr/sbin/slurmctld $SLURMCTLD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 20247 (slurmctld)

CGroup: /system.slice/slurmctld.service

└─20247 /usr/sbin/slurmctld

May 09 16:43:51 vm-sen2agri systemd[1]: Starting Slurm controller daemon...

May 09 16:43:51 vm-sen2agri systemd[1]: PID file /var/run/slurmctld.pid not ....

May 09 16:43:51 vm-sen2agri systemd[1]: Started Slurm controller daemon.

● slurmdbd.service - Slurm DBD accounting daemon

Loaded: loaded (/usr/lib/systemd/system/slurmdbd.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-05-09 16:43:52 CEST; 193ms ago

Process: 20244 ExecStart=/usr/sbin/slurmdbd $SLURMDBD_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 20258 (slurmdbd)

CGroup: /system.slice/slurmdbd.service

└─20258 /usr/sbin/slurmdbd

May 09 16:43:51 vm-sen2agri systemd[1]: Starting Slurm DBD accounting daemon...

May 09 16:43:52 vm-sen2agri systemd[1]: Started Slurm DBD accounting daemon.

Hint: Some lines were ellipsized, use -l to show in full.

[root@vm-sen2agri ~]# srun ls

srun: Required node not available (down, drained or reserved)

srun: job 2556 queued and waiting

Okay. Can you also attach /etc/slurm/slurm.conf and the contents of /var/log/slurm?

Their contents might show whether there is an issue.

slurmd.log

[2018-04-19T10:31:47.548] Message aggregation disabled

[2018-04-19T10:31:47.548] CPU frequency setting not configured for this node

[2018-04-19T10:31:47.548] Resource spec: Reserved system memory limit not confi$

[2018-04-19T10:31:47.550] slurmd version 15.08.7 started

[2018-04-19T10:31:47.551] slurmd started on Thu, 19 Apr 2018 10:31:47 +0200

[2018-04-19T10:31:47.555] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=$

[2018-04-27T20:43:20.775] Slurmd shutdown completing

[2018-04-27T20:46:41.815] Message aggregation disabled

[2018-04-27T20:46:41.845] CPU frequency setting not configured for this node

[2018-04-27T20:46:41.845] Resource spec: Reserved system memory limit not confi$

[2018-04-27T20:46:41.865] slurmd version 15.08.7 started

[2018-04-27T20:46:41.929] slurmd started on Fri, 27 Apr 2018 20:46:41 +0200

[2018-04-27T20:46:41.930] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=$

[2018-04-27T20:46:41.992] Message aggregation disabled

[2018-04-27T20:46:41.992] CPU frequency setting not configured for this node

[2018-04-27T20:46:41.992] Resource spec: Reserved system memory limit not confi$

[2018-04-27T20:46:41.995] slurmd version 15.08.7 started

[2018-04-27T20:46:41.995] killing old slurmd[1457]

[2018-04-27T20:46:41.997] slurmd started on Fri, 27 Apr 2018 20:46:41 +0200

slurmdbd.log

[2018-04-27T20:46:41.668] error: mysql_real_connect failed: 2002 Can't connect $

[2018-04-27T20:46:41.668] error: The database must be up when starting the MYSQ$

[2018-04-27T20:46:46.948] Accounting storage MYSQL plugin loaded

[2018-04-27T20:46:46.960] slurmdbd version 15.08.7 started

[2018-05-09T16:16:41.038] Terminate signal (SIGINT or SIGTERM) received

[2018-05-09T16:16:41.837] Unable to remove pidfile '/var/run/slurmdbd.pid': Per$

[2018-05-09T16:18:00.246] Accounting storage MYSQL plugin loaded

[2018-05-09T16:18:00.257] slurmdbd version 15.08.7 started

[2018-05-09T16:21:30.933] Terminate signal (SIGINT or SIGTERM) received

[2018-05-09T16:21:31.434] Unable to remove pidfile '/var/run/slurmdbd.pid': Per$

[2018-05-09T16:23:17.478] Accounting storage MYSQL plugin loaded

[2018-05-09T16:23:17.486] slurmdbd version 15.08.7 started

[2018-05-09T16:43:50.844] Terminate signal (SIGINT or SIGTERM) received

[2018-05-09T16:43:51.345] Unable to remove pidfile '/var/run/slurmdbd.pid': Per$

[2018-05-09T16:43:52.049] Accounting storage MYSQL plugin loaded

slurm.log:

[2018-04-19T10:31:47.070] slurmctld version 15.08.7 started on cluster sen2agri

[2018-04-19T10:31:47.479] layouts: no layout to initialize

[2018-04-19T10:31:47.486] layouts: loading entities/relations information

[2018-04-19T10:31:47.486] error: Could not open node state file /var/spool/slur$

[2018-04-19T10:31:47.486] error: NOTE: Trying backup state save file. Informati$

[2018-04-19T10:31:47.486] No node state file (/var/spool/slurm/node_state.old) $

[2018-04-19T10:31:47.486] error: Incomplete node data checkpoint file

[2018-04-19T10:31:47.486] Recovered state of 0 nodes

[2018-04-19T10:31:47.486] error: Could not open job state file /var/spool/slurm$

[2018-04-19T10:31:47.486] error: NOTE: Trying backup state save file. Jobs may $

[2018-04-19T10:31:47.486] No job state file (/var/spool/slurm/job_state.old) to$

[2018-04-19T10:31:47.486] cons_res: select_p_node_init

[2018-04-19T10:31:47.486] cons_res: preparing for 2 partitions

[2018-04-19T10:31:47.487] error: Could not open reservation state file /var/spo$

[2018-04-19T10:31:47.487] error: NOTE: Trying backup state save file. Reservati$

[2018-04-19T10:31:47.487] No reservation state file (/var/spool/slurm/resv_stat$

[2018-04-19T10:31:47.487] Recovered state of 0 reservations

[2018-04-19T10:31:47.487] error: Could not open trigger state file /var/spool/s$

[2018-04-19T10:31:47.487] error: NOTE: Trying backup state save file. Triggers $

[2018-05-09T16:43:52.055] slurmdbd version 15.08.7 started

And also:

$ scontrol show node

$ sinfo

Your slurmd.log seems incomplete. Does it really end on the 27th of April?

Sorry, here is slurmd.log again:

[2018-04-19T10:31:47.548] Message aggregation disabled

[2018-04-19T10:31:47.548] CPU frequency setting not configured for this node

[2018-04-19T10:31:47.548] Resource spec: Reserved system memory limit not configured for this node

[2018-04-19T10:31:47.550] slurmd version 15.08.7 started

[2018-04-19T10:31:47.551] slurmd started on Thu, 19 Apr 2018 10:31:47 +0200

[2018-04-19T10:31:47.555] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=39056 CPUSpecList=(null)

[2018-04-27T20:43:20.775] Slurmd shutdown completing

[2018-04-27T20:46:41.815] Message aggregation disabled

[2018-04-27T20:46:41.845] CPU frequency setting not configured for this node

[2018-04-27T20:46:41.845] Resource spec: Reserved system memory limit not configured for this node

[2018-04-27T20:46:41.865] slurmd version 15.08.7 started

[2018-04-27T20:46:41.929] slurmd started on Fri, 27 Apr 2018 20:46:41 +0200

[2018-04-27T20:46:41.930] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=22 CPUSpecList=(null)

[2018-04-27T20:46:41.992] Message aggregation disabled

[2018-04-27T20:46:41.992] CPU frequency setting not configured for this node

[2018-04-27T20:46:41.992] Resource spec: Reserved system memory limit not configured for this node

[2018-04-27T20:46:41.995] slurmd version 15.08.7 started

[2018-04-27T20:46:41.995] killing old slurmd[1457]

[2018-04-27T20:46:41.997] slurmd started on Fri, 27 Apr 2018 20:46:41 +0200

[2018-04-27T20:46:41.999] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=22 CPUSpecList=(null)

[2018-04-27T20:46:42.943] Slurmd shutdown completing

[2018-05-09T16:16:42.939] Slurmd shutdown completing

[2018-05-09T16:17:26.896] Message aggregation disabled

[2018-05-09T16:17:27.025] CPU frequency setting not configured for this node

[2018-05-09T16:17:27.025] Resource spec: Reserved system memory limit not configured for this node

[2018-05-09T16:17:27.028] slurmd version 15.08.7 started

[2018-05-09T16:17:27.030] slurmd started on Wed, 09 May 2018 16:17:27 +0200

[2018-05-09T16:17:27.032] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=1020667 CPUSpecList=(null)

[2018-05-09T16:17:36.134] error: Unable to register: Unable to contact slurm controller (connect failure)

[2018-05-09T16:17:46.138] error: Unable to register: Unable to contact slurm controller (connect failure)

[2018-05-09T16:17:56.142] error: Unable to register: Unable to contact slurm controller (connect failure)

[2018-05-09T16:21:30.933] Slurmd shutdown completing

[2018-05-09T16:23:17.681] Message aggregation disabled

[2018-05-09T16:23:17.682] CPU frequency setting not configured for this node

[2018-05-09T16:23:17.682] Resource spec: Reserved system memory limit not configured for this node

[2018-05-09T16:23:17.684] slurmd version 15.08.7 started

[2018-05-09T16:23:17.685] slurmd started on Wed, 09 May 2018 16:23:17 +0200

[2018-05-09T16:23:17.685] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=1021018 CPUSpecList=(null)

[2018-05-09T16:43:50.846] Slurmd shutdown completing

[2018-05-09T16:43:52.217] Message aggregation disabled

[2018-05-09T16:43:52.218] CPU frequency setting not configured for this node

[2018-05-09T16:43:52.218] Resource spec: Reserved system memory limit not configured for this node

[2018-05-09T16:43:52.220] slurmd version 15.08.7 started

[2018-05-09T16:43:52.221] slurmd started on Wed, 09 May 2018 16:43:52 +0200

[2018-05-09T16:43:52.221] CPUs=32 Boards=1 Sockets=2 Cores=16 Threads=1 Memory=201341 TmpDisk=8184839 Uptime=1022252 CPUSpecList=(null)

show node:

[root@vm-sen2agri slurm]# scontrol show node

NodeName=localhost Arch=x86_64 CoresPerSocket=1

CPUAlloc=0 CPUErr=0 CPUTot=64 CPULoad=6.10 Features=(null)

Gres=(null)

NodeAddr=localhost NodeHostName=localhost Version=15.08

OS=Linux RealMemory=1 AllocMem=0 FreeMem=591 Sockets=64 Boards=1

State=IDLE+DRAIN ThreadsPerCore=1 TmpDisk=0 Weight=1 Owner=N/A

BootTime=2018-04-27T20:46:19 SlurmdStartTime=2018-05-09T16:43:52

CapWatts=n/a

CurrentWatts=0 LowestJoules=0 ConsumedJoules=0

ExtSensorsJoules=n/s ExtSensorsWatts=0 ExtSensorsTemp=n/s

Reason=Low socket*core*thread count, Low CPUs [slurm@2018-04-19T10:31:48]

sinfo:

[root@vm-sen2agri slurm]# sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

sen2agri* up infinite 1 drain localhost

sen2agriHi up infinite 1 drain localhost

So it looks like SLURM is trying to drain and disable the node. I think you can see the reason with sinfo -R. You should also check if there is enough free disk space on the root partition (df -h), just in case.
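The Reason field in the scontrol output above (“Low socket*core*thread count, Low CPUs”) suggests that the node definition used by the controller claims more CPUs (CPUTot=64, Sockets=64) than slurmd actually detects (CPUs=32, Sockets=2, Cores=16 in slurmd.log). If so, SLURM would drain the node again after every restart. This is an inference from the outputs in this thread, not a confirmed diagnosis. A minimal sketch with hypothetical helper names that compares the two numbers:

```shell
# Hypothetical helper: value of a single-token field (e.g. State,
# CPUTot) from "scontrol show node" output on stdin. Fields whose
# values contain spaces, such as Reason, are not handled.
node_field() {
  tr -s ' ' '\n' | awk -v k="$1" 'index($0, k "=") == 1 { print substr($0, length(k) + 2); exit }'
}

# Hypothetical helper: CPU count from the last "CPUs=N" that slurmd
# logged at startup (slurmd.log on stdin).
slurmd_cpus() {
  awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^CPUs=[0-9]+$/) v = substr($i, 6) } END { print v }'
}

# Compare the CPU count the controller expects (file 1: saved
# "scontrol show node" output) with what slurmd found (file 2: slurmd.log).
check_cpu_mismatch() {
  local configured detected
  configured=$(node_field CPUTot < "$1")
  detected=$(slurmd_cpus < "$2")
  if [ -z "$configured" ] || [ -z "$detected" ]; then
    echo "could not read CPU counts" >&2
    return 1
  fi
  if [ "$configured" -gt "$detected" ]; then
    echo "mismatch: controller expects $configured CPUs, slurmd detected $detected"
  else
    echo "ok: $configured configured, $detected detected"
  fi
}
```

Usage, assuming the log lives under /var/log/slurm: `scontrol show node > node.txt; check_cpu_mismatch node.txt /var/log/slurm/slurmd.log`. `slurmd -C` prints the hardware line as slurmd sees it, which can be compared with the NodeName line in /etc/slurm/slurm.conf.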

You can try to resume it with scontrol update NodeName=localhost State=RESUME.
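The same resume step can be scripted for every drained node, which is handy if it has to be repeated. A minimal sketch using sinfo's node-oriented output (`-N`, `-h`, `-o "%N %t"`, where `%t` is the compact state such as `drain` or `drng`); the helper names are hypothetical, and this only repeats the manual workaround rather than removing whatever causes the drain.

```shell
# Hypothetical helper: read "sinfo -h -N -o '%N %t'" output on stdin
# and print each node whose compact state is drain/drng, once.
# State suffixes such as "*" (not responding) are tolerated.
drained_nodes() {
  awk '$2 ~ /^(drain|drng)/ { print $1 }' | sort -u
}

# Resume every drained node (needs SLURM admin rights, e.g. root).
resume_drained() {
  local node
  sinfo -h -N -o "%N %t" | drained_nodes | while read -r node; do
    echo "resuming $node"
    scontrol update NodeName="$node" State=RESUME
  done
}
```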

Hi,

Thanks a lot for your help! The L3 processors have now started running!

But whenever I restart SLURM, I have to resume the node using scontrol.

Martin